
Qwen/Qwen3-Coder-480B-A35B-Instruct

$0.40 in / $1.60 out per 1M tokens
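As a rough illustration of how the per-token pricing above translates into a request cost, here is a minimal sketch. The function name `estimate_cost_usd` is hypothetical, and it assumes billing is strictly linear in token counts with no minimums, discounts, or caching, none of which this page states.

```python
# Prices taken from the listing above, in USD per 1M tokens.
INPUT_USD_PER_M = 0.40
OUTPUT_USD_PER_M = 1.60


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request, assuming purely linear pricing."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M
    return total / 1_000_000


if __name__ == "__main__":
    # A repository-sized prompt with a short completion.
    print(f"{estimate_cost_usd(200_000, 10_000):.4f} USD")
```

Because output tokens cost four times as much as input tokens, long completions affect the estimate far more than long prompts of the same size.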

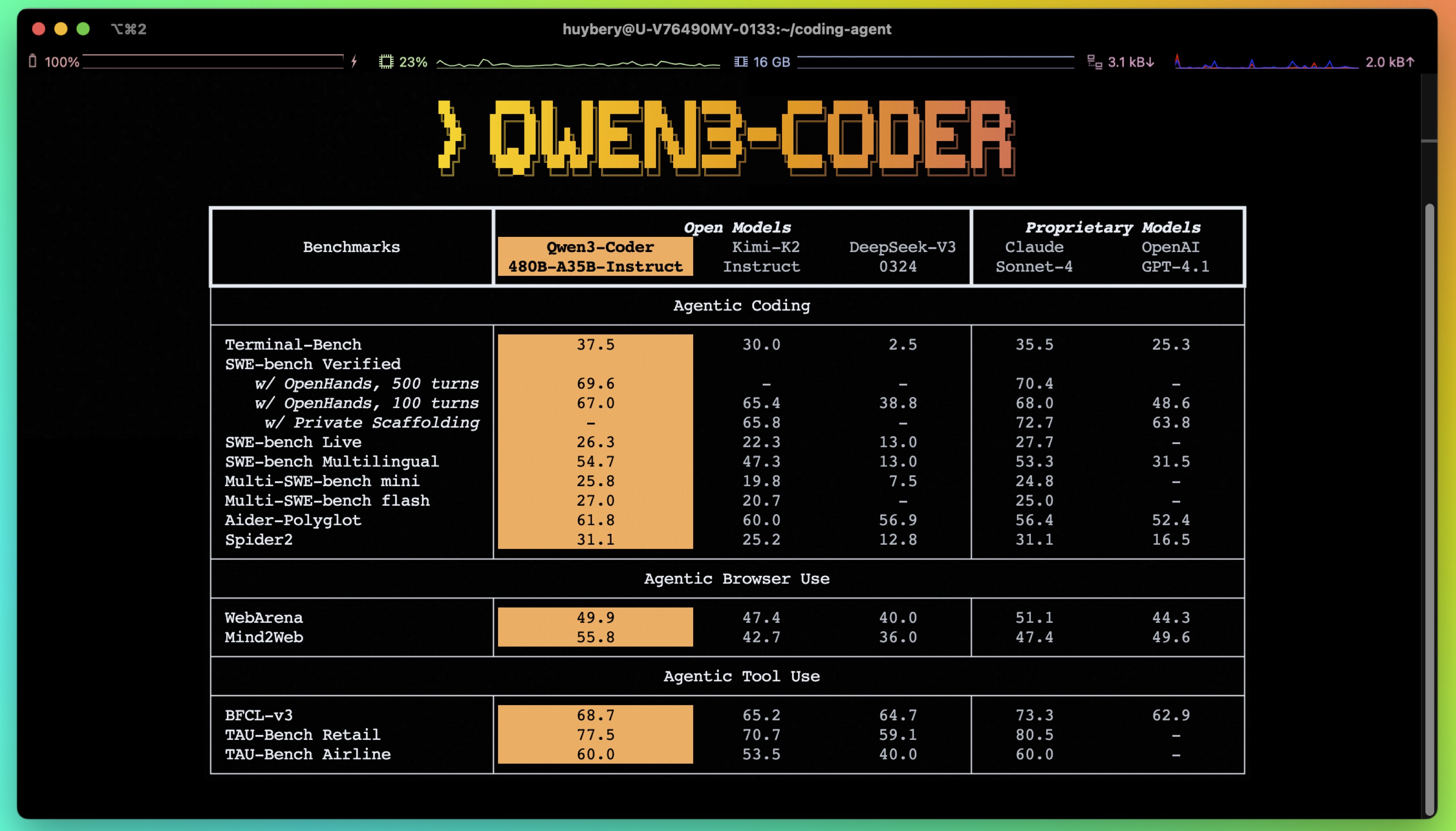

Qwen3-Coder-480B-A35B-Instruct is Qwen3's most agentic code model, with strong performance on agentic coding, agentic browser use, and other foundational coding tasks, achieving results comparable to Claude Sonnet.


Qwen3-Coder-480B-A35B-Instruct is Qwen3's most agentic code model to date. Qwen3-Coder is available in multiple sizes, and Qwen3-Coder-480B-A35B-Instruct is the most powerful variant, with the following key enhancements:

- Strong performance among open models on agentic coding, agentic browser use, and other foundational coding tasks, with results comparable to Claude Sonnet.

- Long-context capabilities, with native support for 256K tokens, extendable up to 1M tokens using YaRN, optimized for repository-scale understanding.

- Agentic coding support for most platforms, such as Qwen Code and CLINE, with a specially designed function call format.
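To make the long-context point concrete, the sketch below computes the scaling factor needed to stretch the native 262,144-token window to a larger target. The helper names are hypothetical. The dictionary keys (`rope_type`, `factor`, `original_max_position_embeddings`) follow the `rope_scaling` convention used in Hugging Face Transformers configs; whether this exact fragment is what a given serving stack expects for this model is an assumption, so check the model's own documentation before relying on it.

```python
NATIVE_CONTEXT = 262_144  # native context length listed for this model


def yarn_factor(target_context: int, native_context: int = NATIVE_CONTEXT) -> float:
    """Return the ratio by which the native window must be stretched."""
    if target_context <= 0:
        raise ValueError("target_context must be positive")
    if target_context <= native_context:
        return 1.0  # no scaling needed within the native window
    return target_context / native_context


def rope_scaling_config(target_context: int) -> dict:
    """Build an illustrative rope_scaling fragment (assumed key names)."""
    return {
        "rope_type": "yarn",
        "factor": yarn_factor(target_context),
        "original_max_position_embeddings": NATIVE_CONTEXT,
    }


if __name__ == "__main__":
    # 4 x 262,144 = 1,048,576 tokens, i.e. roughly the 1M figure.
    print(rope_scaling_config(1_048_576))
```

A factor of 4 covers the "up to 1M tokens" figure, since 4 × 262,144 = 1,048,576.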

Model Overview

Qwen3-Coder-480B-A35B-Instruct has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining & Post-training

- Number of Parameters: 480B in total and 35B activated

- Number of Layers: 62

- Number of Attention Heads (GQA): 96 for Q and 8 for KV

- Number of Experts: 160

- Number of Activated Experts: 8

- Context Length: 262,144 natively.
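The figures above imply a few ratios that are useful when reasoning about inference cost. The sketch below derives them. The `ModelSpec` class is a hypothetical container, and the KV-cache estimate takes `head_dim` and `bytes_per_value` as inputs because neither is stated on this page; the value 128 used in the example is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    # Values copied from the model overview list above.
    total_params_b: float = 480.0
    active_params_b: float = 35.0
    num_layers: int = 62
    q_heads: int = 96
    kv_heads: int = 8
    num_experts: int = 160
    active_experts: int = 8
    context_length: int = 262_144

    def active_param_fraction(self) -> float:
        """Share of parameters used per token (MoE sparsity)."""
        return self.active_params_b / self.total_params_b

    def q_heads_per_kv_head(self) -> int:
        """GQA group size: query heads sharing one KV head."""
        return self.q_heads // self.kv_heads

    def active_expert_fraction(self) -> float:
        return self.active_experts / self.num_experts

    def kv_cache_bytes(self, tokens: int, head_dim: int, bytes_per_value: int = 2) -> int:
        """Keys plus values across all layers; head_dim is caller-supplied."""
        return 2 * self.num_layers * self.kv_heads * head_dim * bytes_per_value * tokens


if __name__ == "__main__":
    spec = ModelSpec()
    print(f"active parameters: {spec.active_param_fraction():.1%}")
    print(f"query heads per KV head: {spec.q_heads_per_kv_head()}")
    print(f"active experts: {spec.active_expert_fraction():.1%}")
    # head_dim=128 is an assumption, not a figure from this page.
    gib = spec.kv_cache_bytes(spec.context_length, head_dim=128) / 2**30
    print(f"KV cache at full context (16-bit values): {gib:.0f} GiB")
```

Only about 7% of the parameters and 5% of the experts are active per token, and each KV head is shared by 12 query heads, which is what keeps the cache manageable at long context lengths.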

NOTE: This model supports only non-thinking mode and does not generate **\<think>\</think>** blocks in its output. Specifying enable_thinking=False is also no longer required.
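In practice, the note above means a request needs no thinking-related switch and the response needs no post-processing to strip reasoning blocks. The sketch below illustrates this with a plain request payload. It assumes an OpenAI-style chat completions schema (`model`, `messages`, `max_tokens`), which this page does not specify, and both helper names are hypothetical.

```python
import json

MODEL_ID = "Qwen/Qwen3-Coder-480B-A35B-Instruct"


def build_chat_request(prompt: str, max_tokens: int = 512) -> dict:
    """Build a chat payload (assumed OpenAI-style schema), with no enable_thinking flag."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def contains_think_block(text: str) -> bool:
    """Sanity check: output from this model is not expected to contain think tags."""
    return "<think>" in text or "</think>" in text


if __name__ == "__main__":
    payload = build_chat_request("Write a function that reverses a linked list.")
    print(json.dumps(payload, indent=2))
```

The `contains_think_block` check is only a guard for client code that was written for thinking-capable models and might otherwise try to parse such blocks.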

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to our blog, GitHub, and Documentation.

© 2026 Deep Infra. All rights reserved.