
Flan-UL2 is probably the best open source model available right now for chatbots. In this post we will show you how easy it is to get started with it. Flan-UL2 is large, with 20B parameters. It is the UL2 model fine-tuned on the Flan dataset. Because it is so large, it is not easy to deploy on your own machine. Renting a GPU on AWS costs around $1.5 per hour, or $1080 per month. With DeepInfra model deployments you pay only for inference time, and we do not charge for cold starts. Our pricing is $0.0005 per second of inference on an Nvidia A100, which works out to about $0.0001 per token generated by Flan-UL2.
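As a quick sanity check of the figures above, this shell sketch recomputes them with awk. It assumes a 30-day month and uses only the rates quoted in this post; the per-token price is itself an approximation, so the implied speed is a rough figure. `cost_summary` is a name made up for this example.

```shell
#!/bin/sh
# Recomputes the cost figures quoted in the post with awk (POSIX sh has no floats).
# The rates are the ones stated in the post; update them if pricing changes.
cost_summary() {
  awk -v gpu_hourly=1.5 -v per_second=0.0005 -v per_token=0.0001 'BEGIN {
    monthly = gpu_hourly * 24 * 30            # always-on AWS GPU, 30-day month
    printf "AWS GPU per month: $%.0f\n", monthly
    # Dividing the per-second rate by the per-token rate gives the
    # rough generation speed implied by the per-token estimate.
    printf "Implied speed: %.0f tokens/s\n", per_second / per_token
    # Hours of billed A100 inference that the same monthly budget buys.
    printf "Inference hours for the same budget: %.0f\n", monthly / per_second / 3600
  }'
}

cost_summary
```

In other words, the $1080 an always-on AWS GPU costs per month would pay for roughly 600 hours of billed inference time at the quoted rate, and you are only billed while requests are actually running.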

Also check out the model page at https://deepinfra.com/google/flan-ul2, where you can run inferences and find the docs/API for running inferences via curl.

Getting started

First, you'll need to get an API key from the DeepInfra dashboard.

- Sign up or log in to your DeepInfra account

- Navigate to the API Keys section in the dashboard

- Create a new API key for authentication
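Once you have a key, a common pattern is to export it as an environment variable rather than pasting it into every command. This is a minimal sketch assuming a POSIX shell; `DEEPINFRA_API_KEY` and `check_key` are names chosen for this example, not something DeepInfra requires.

```shell
#!/bin/sh
# Export the key once per shell session so later commands can read it.
# DEEPINFRA_API_KEY is a variable name chosen for this sketch.
export DEEPINFRA_API_KEY='paste-your-key-here'

# Fail early with a clear message if the variable is missing or empty.
check_key() {
  if [ -z "${DEEPINFRA_API_KEY:-}" ]; then
    echo 'DEEPINFRA_API_KEY is not set' >&2
    return 1
  fi
}

check_key && echo 'API key found'
```

With the key exported, a command can reference it as `"Bearer $DEEPINFRA_API_KEY"` instead of a hard-coded value.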

Deployment

You can deploy the google/flan-ul2 model easily through the web dashboard or API. The model will be automatically deployed when you first make an inference request.

Inference

You can use it with our REST API. Here's how to use it with curl:

curl -X POST \
    -d '{"prompt": "Hello, how are you?"}' \
    -H 'Content-Type: application/json' \
    -H "Authorization: Bearer YOUR_API_KEY" \
    'https://api.deepinfra.com/v1/inference/google/flan-ul2'
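If you call the endpoint repeatedly, it can help to wrap the request in a shell function. This sketch sends the same request as the curl command in this section, with the same `prompt` field and URL. `build_payload`, `flan_ul2`, and the `DEEPINFRA_API_KEY` variable are hypothetical names for this example, and the escaping only handles double quotes and backslashes in single-line prompts.

```shell
#!/bin/sh
# Hypothetical helper: build the JSON body from the curl example, escaping
# backslashes and double quotes so such prompts stay valid JSON.
# Newlines and other control characters are NOT escaped.
build_payload() {
  escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"prompt": "%s"}' "$escaped"
}

# Hypothetical helper: POST a prompt to the Flan-UL2 inference endpoint.
flan_ul2() {
  # Abort with an error if the key is unset or empty.
  : "${DEEPINFRA_API_KEY:?set DEEPINFRA_API_KEY first}"
  curl -sS -X POST \
    -d "$(build_payload "$1")" \
    -H 'Content-Type: application/json' \
    -H "Authorization: Bearer $DEEPINFRA_API_KEY" \
    'https://api.deepinfra.com/v1/inference/google/flan-ul2'
}
```

Usage, after exporting your key: `flan_ul2 'Hello, how are you?'`. For prompts that may contain newlines or other special characters, building the body with a JSON tool such as jq is a safer choice than the sed-based escaping here.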

For the full documentation on calling this model, see the model page on the DeepInfra website or the API documentation.

For a list of all the models available on DeepInfra, visit the models page on our website or query the API.

There is no easier way to get started with arguably one of the best open source LLMs. That was easy, right? You did not have to deal with Docker, transformers, PyTorch, etc. If you have any questions, just reach out to us on our Discord server.

Build a Streaming Chat Backend in 10 Minutes<p>When large language models move from demos into real systems, expectations change. The goal is no longer to produce clever text, but to deliver predictable latency, responsive behavior, and reliable infrastructure characteristics. In chat-based systems, especially, how fast a response starts often matters more than how fast it finishes. This is where token streaming becomes […]</p>

Build a Streaming Chat Backend in 10 Minutes<p>When large language models move from demos into real systems, expectations change. The goal is no longer to produce clever text, but to deliver predictable latency, responsive behavior, and reliable infrastructure characteristics. In chat-based systems, especially, how fast a response starts often matters more than how fast it finishes. This is where token streaming becomes […]</p>

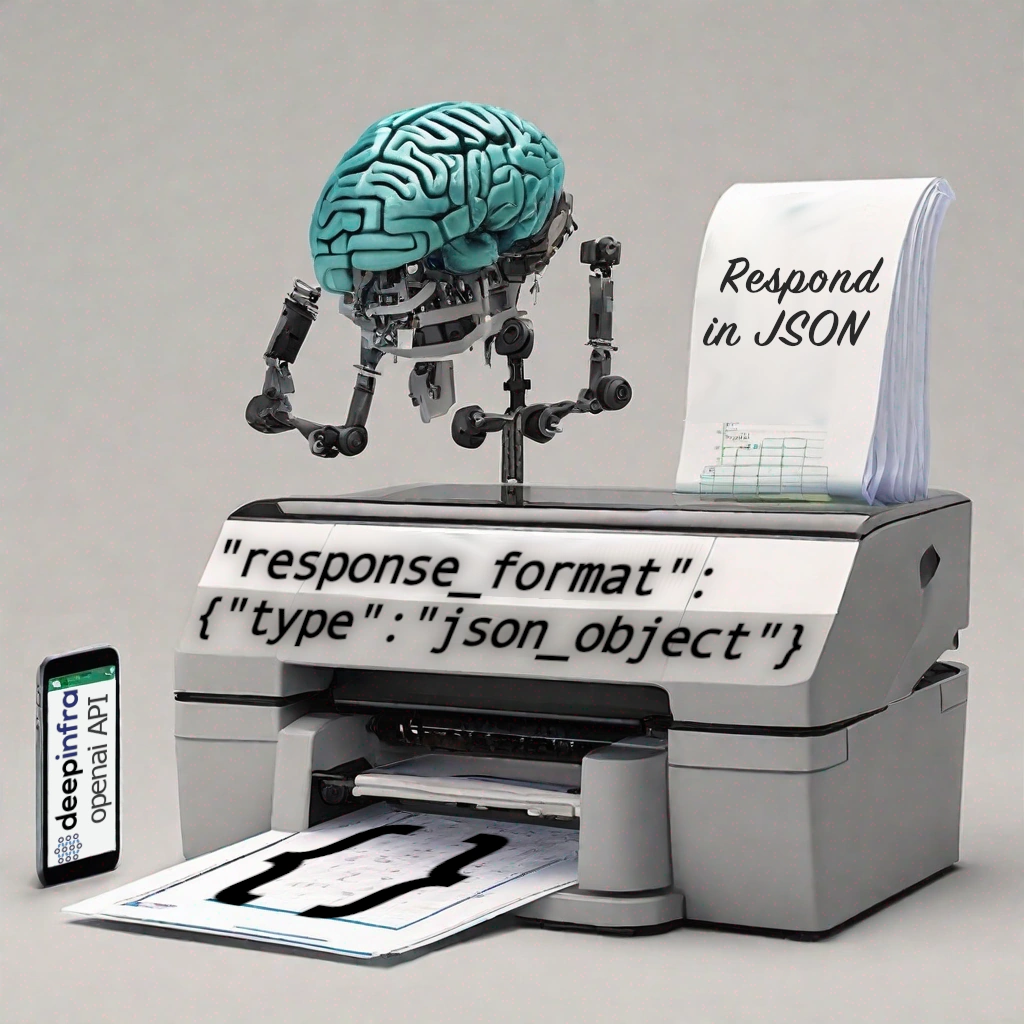


© 2026 Deep Infra. All rights reserved.