We're excited to announce that the Function Calling feature is now available on DeepInfra, starting with the Mistral-7B and Mixtral-8x7B models. Other models will be supported soon.

LLMs are powerful tools for a wide range of tasks. However, on their own they're limited when a task requires external knowledge. Function calling greatly extends what LLMs can do: it lets the model call external functions provided by the user and use the results to give a comprehensive response to the user's query.

This feature was first introduced by OpenAI, and open-source LLMs are gradually catching up on external tool calling. DeepInfra is committed to closing this gap and making function calling available at a lower price.

In this article, we'll explain how this feature works and how to use it.

Understanding function calling

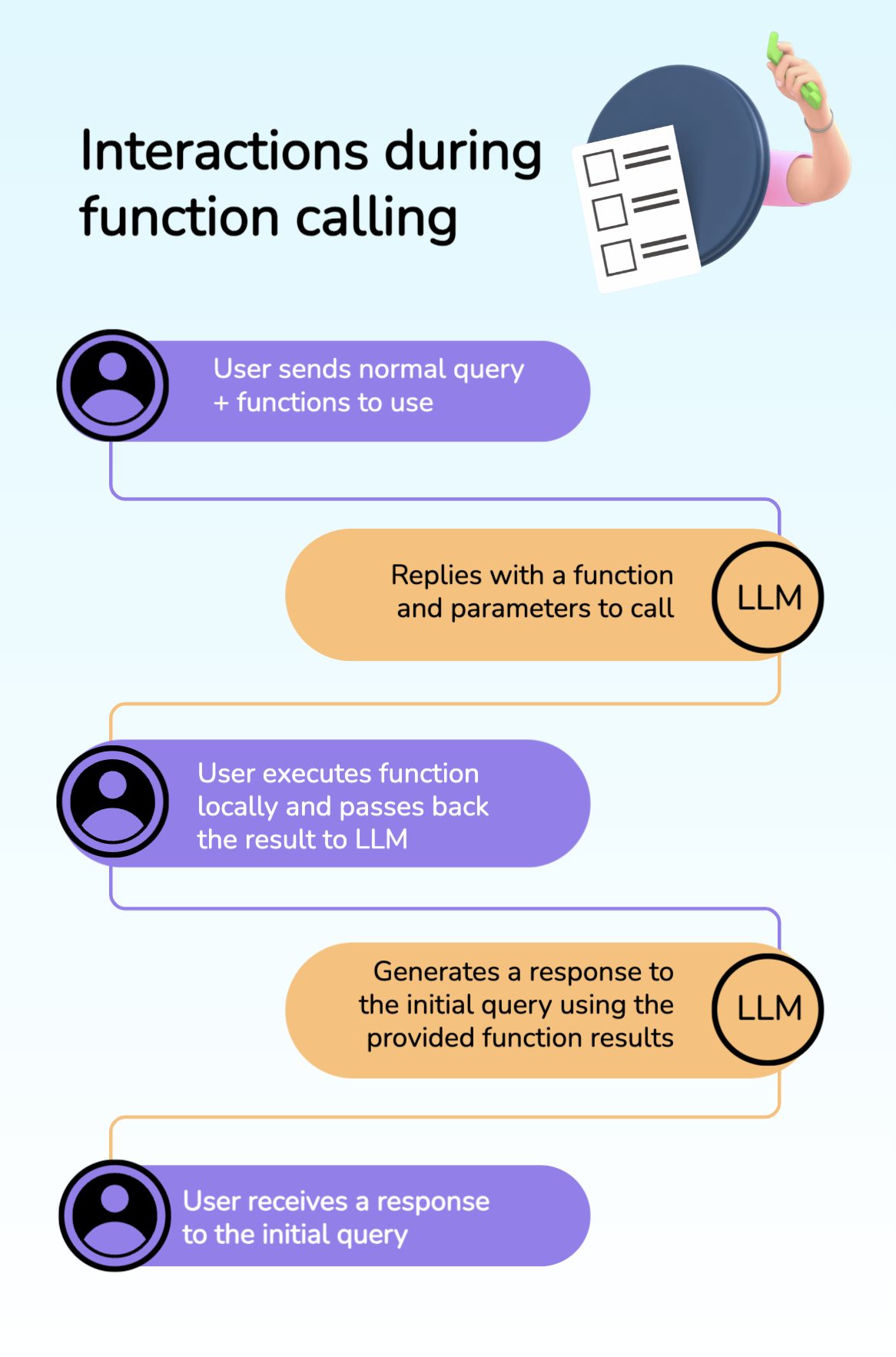

The diagram above shows the high-level interaction flow during function calling.

Pros:

- The user can supply any kind of function to the model (a.k.a. the Assistant). The Assistant will use these functions effectively as long as their descriptions are well written.

Cons:

- The user (most likely a developer) needs to write some code, or otherwise have a way, to execute the function with the arguments the model chose.

During the interaction, the model might ask clarifying questions so that it can call the functions with the right arguments.
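To make this flow concrete, here is a minimal, offline sketch of the loop a developer writes around the model. The LLM is replaced by a hypothetical `fake_model` stub that returns OpenAI-style message shapes (first an assistant message with `tool_calls`, then a final text answer); a real application would call the chat completions API instead. The `lookup_time` tool is also made up for illustration.

```python
import json

# Hypothetical tool that the developer exposes to the model.
def lookup_time(city):
    return json.dumps({"city": city, "time": "12:00"})

TOOLS = {"lookup_time": lookup_time}

def fake_model(messages):
    """Stand-in for the LLM (assumption): requests a tool call first,
    then answers once a tool result is present in the conversation."""
    if not any(m["role"] == "tool" for m in messages):
        return {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "type": "function",
                "function": {"name": "lookup_time",
                             "arguments": json.dumps({"city": "Paris"})},
            }],
        }
    result = json.loads(messages[-1]["content"])
    return {"role": "assistant",
            "content": f"It is {result['time']} in {result['city']}."}

def run_conversation(user_prompt, model=fake_model):
    messages = [{"role": "user", "content": user_prompt}]
    while True:
        reply = model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls")
        if not tool_calls:
            # No function calls: this is the answer (or a clarifying question) for the user.
            return reply["content"], messages
        for call in tool_calls:
            # The developer's code executes the function the model picked.
            func = TOOLS[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": func(**args),
            })

answer, history = run_conversation("What time is it in Paris?")
print(answer)  # It is 12:00 in Paris.
```

If the model asks a clarifying question instead, it comes back as an ordinary assistant message without `tool_calls`, so the loop hands it back to the user.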

Use cases

Here are a few example use cases that benefit from the function calling capability of LLMs:

- Real-time data processor: you can provide a function that accesses real-time data and acts on it

- Math Problem Solver: the model can solve complex math problems (e.g., by calling a function that talks to WolframAlpha or other third-party tools)

- AI Virtual Assistants: you can give your AI assistant various capabilities that the model can call, and then respond meaningfully to the end user
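As an illustration of the Math Problem Solver idea, here is a hypothetical `evaluate_arithmetic` tool: a definition in the same `tools` format used in the example below, plus a local implementation that safely evaluates basic arithmetic with Python's `ast` module instead of calling WolframAlpha. The tool name and the implementation are assumptions for this sketch.

```python
import ast
import json
import operator

# Tool definition in the OpenAI-compatible "tools" format (hypothetical tool).
MATH_TOOL = {
    "type": "function",
    "function": {
        "name": "evaluate_arithmetic",
        "description": "Evaluate an arithmetic expression using + - * / and parentheses",
        "parameters": {
            "type": "object",
            "properties": {
                "expression": {"type": "string", "description": "e.g. (2 + 3) * 4"}
            },
            "required": ["expression"],
        },
    },
}

# Only these operators are allowed; any other syntax is rejected.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.USub: operator.neg, ast.UAdd: operator.pos,
}

def _eval(node):
    if isinstance(node, ast.Expression):
        return _eval(node.body)
    if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError("unsupported expression")

def evaluate_arithmetic(expression):
    """Return a JSON string, like the weather function in the example."""
    try:
        value = _eval(ast.parse(expression, mode="eval"))
        return json.dumps({"expression": expression, "result": value})
    except (ValueError, SyntaxError, ZeroDivisionError) as exc:
        return json.dumps({"expression": expression, "error": str(exc)})

print(evaluate_arithmetic("(2 + 3) * 4"))  # {"expression": "(2 + 3) * 4", "result": 20}
```

Walking the syntax tree with an allow-list of operators, rather than calling `eval`, keeps model-supplied arguments from executing arbitrary code.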

Now, let's consider a concrete example.

Example with code

Let's consider this example:

- The user has a function, get_current_weather(location).

- The user wants to find out which city has the hottest weather.

Here is the user prompt:

```python
messages = [
    {
        "role": "user",
        "content": "Which city has the hottest weather today: San Francisco, Tokyo, or Paris?",
    }
]
```

Here is the definition of our function:

```python
tools = [{
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA",
                }
            },
            "required": ["location"],
        },
    },
}]
```
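Writing these definitions by hand gets repetitive once you have several functions. One option is a small helper that builds the same structure from a name, a description, and a dict of parameter descriptions. `make_tool` below is a hypothetical convenience function, not part of the OpenAI SDK or DeepInfra's API, and it only handles required, string-typed parameters.

```python
def make_tool(name, description, params):
    """Build an OpenAI-style tool definition.

    params maps each parameter name to its description; every parameter
    is assumed to be a required string (a simplification for this sketch).
    """
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {
                    p: {"type": "string", "description": d}
                    for p, d in params.items()
                },
                "required": list(params),
            },
        },
    }

# Produces the same structure as the hand-written definition above.
tools = [make_tool(
    "get_current_weather",
    "Get the current weather in a given location",
    {"location": "The city and state, e.g. San Francisco, CA"},
)]
```

For numeric, enum, or optional parameters you would extend the helper or fall back to writing the JSON Schema directly.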

Let's send the request and print the response:

```python
import openai

# DeepInfra exposes an OpenAI-compatible endpoint, so the official openai client works
client = openai.OpenAI(
    base_url="https://api.deepinfra.com/v1/openai",
    api_key="<Your-DeepInfra-API-Key>",
)

response = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=messages,
    tools=tools,
    tool_choice="auto",
)

# tool_calls is None if the model answered directly without calling a function
tool_calls = response.choices[0].message.tool_calls or []

for tool_call in tool_calls:
    print(tool_call.model_dump())
```

The model requested three calls to get_current_weather, one for each city. Output:

```
{'id': 'call_06ONYZC3ptjxNBxURGzoEhN6', 'function': {'arguments': '{"location": "San Francisco, CA"}', 'name': 'get_current_weather'}, 'type': 'function'}
{'id': 'call_dMcwnJns2GZ2WiKagTwHVCBk', 'function': {'arguments': '{"location": "Tokyo, Japan"}', 'name': 'get_current_weather'}, 'type': 'function'}
{'id': 'call_CUK78ZZy5JLgtuWA076r94L6', 'function': {'arguments': '{"location": "Paris, France"}', 'name': 'get_current_weather'}, 'type': 'function'}
```
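Note that `arguments` arrives as a JSON-encoded string, not a dict, so it must be decoded before the function can be called. Smaller open-source models can occasionally emit malformed JSON, so it may be worth decoding defensively. The sketch below works on plain dicts shaped like the `model_dump()` output above; `parse_tool_call` is a hypothetical helper name.

```python
import json

def parse_tool_call(tool_call):
    """Return (name, args) for a dumped tool call, or (name, None) if the
    arguments are not a valid JSON object."""
    name = tool_call["function"]["name"]
    try:
        args = json.loads(tool_call["function"]["arguments"])
    except (json.JSONDecodeError, TypeError):
        return name, None
    if not isinstance(args, dict):
        # Valid JSON, but not an object we can pass as keyword arguments.
        return name, None
    return name, args

call = {
    "id": "call_dMcwnJns2GZ2WiKagTwHVCBk",
    "function": {"arguments": '{"location": "Tokyo, Japan"}',
                 "name": "get_current_weather"},
    "type": "function",
}
print(parse_tool_call(call))  # ('get_current_weather', {'location': 'Tokyo, Japan'})
```

When the arguments can't be parsed, one option is to send an error message back as the tool result so the model can retry, rather than letting the application crash.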

This is the dummy function we'll use in this example:

```python
import json

# Example dummy function hard coded to return the same weather
# In production, this could be your backend API or an external API
def get_current_weather(location):
    """Get the current weather in a given location"""
    print("Calling get_current_weather client side.")
    if "tokyo" in location.lower():
        return json.dumps({"location": "Tokyo", "temperature": "75"})
    elif "san francisco" in location.lower():
        return json.dumps({"location": "San Francisco", "temperature": "60"})
    elif "paris" in location.lower():
        return json.dumps({"location": "Paris", "temperature": "70"})
    else:
        return json.dumps({"location": location, "temperature": "unknown"})
```

Now let's execute the requested calls and pass the results back to the model:

```python
# extend conversation with assistant's reply
messages.append(response.choices[0].message)

for tool_call in tool_calls:
    function_name = tool_call.function.name
    if function_name == "get_current_weather":
        function_args = json.loads(tool_call.function.arguments)
        function_response = get_current_weather(
            location=function_args.get("location")
        )
        # extend conversation with function response
        messages.append({
            "tool_call_id": tool_call.id,
            "role": "tool",
            "content": function_response,
        })

# get a new response from the model where it can see the function responses
second_response = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=messages,
)

print(second_response.choices[0].message.content)
```
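The `if function_name == ...` check works for a single tool but grows awkward as you add more. A common alternative is a dictionary mapping tool names to Python callables. The sketch below uses hypothetical names (`AVAILABLE_FUNCTIONS`, `run_tool_calls`) and a stub weather function so it runs without an API key; it takes tool calls as plain dicts like the `model_dump()` output above and returns the `"role": "tool"` messages to append to the conversation.

```python
import json

def get_current_weather(location):
    # Stub standing in for the dummy function above.
    return json.dumps({"location": location, "temperature": "70"})

# Map each tool name the model may request to the function that implements it.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}

def run_tool_calls(tool_calls):
    """Execute each requested call and build the matching tool messages."""
    tool_messages = []
    for call in tool_calls:
        name = call["function"]["name"]
        func = AVAILABLE_FUNCTIONS.get(name)
        if func is None:
            # Report unknown tools back to the model instead of crashing.
            content = json.dumps({"error": f"unknown function: {name}"})
        else:
            args = json.loads(call["function"]["arguments"])
            content = func(**args)
        tool_messages.append(
            {"tool_call_id": call["id"], "role": "tool", "content": content}
        )
    return tool_messages

calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_current_weather",
                  "arguments": '{"location": "Paris, France"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_stock_price", "arguments": "{}"}},
]
for message in run_tool_calls(calls):
    print(message)
```

Answering every `tool_call_id`, even for an unknown function, keeps the conversation consistent: OpenAI's API expects one tool message per requested call, and we assume DeepInfra's OpenAI-compatible endpoint behaves similarly.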

Here is the output:

```
Based on the current weather data, Tokyo has the hottest weather today with a temperature of 75°F (24°C).
```

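Notice that the model inferred the temperatures were in Fahrenheit and converted the result to Celsius on its own. Because our dummy function returned only a bare number with no unit, Fahrenheit was an inference on the model's part; including the unit in the function response avoids relying on that guess. Responses like this make function calling with LLMs all the more attractive.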
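We can double-check the model's answer locally. The sketch below takes the three JSON strings returned by the dummy function, picks the highest temperature, and converts it from Fahrenheit to Celsius with C = (F - 32) × 5/9. Treating the values as Fahrenheit is the same assumption the model made, and `hottest_city` and `fahrenheit_to_celsius` are hypothetical helper names.

```python
import json

def fahrenheit_to_celsius(f):
    return (f - 32) * 5 / 9

def hottest_city(function_responses):
    """Return (city, temp_f) with the highest temperature.

    Assumes every response carries a numeric temperature string.
    """
    readings = [json.loads(r) for r in function_responses]
    best = max(readings, key=lambda r: float(r["temperature"]))
    return best["location"], float(best["temperature"])

# The values returned by the dummy get_current_weather function above.
responses = [
    '{"location": "San Francisco", "temperature": "60"}',
    '{"location": "Tokyo", "temperature": "75"}',
    '{"location": "Paris", "temperature": "70"}',
]
city, temp_f = hottest_city(responses)
print(city, temp_f, round(fahrenheit_to_celsius(temp_f)))  # Tokyo 75.0 24
```

(75 - 32) × 5/9 ≈ 23.9, which rounds to the 24°C the model reported.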

Conclusion

We're excited to bring the Function Calling feature to our platform. Try it out with our Function Calling Guide.

We believe it will help developers build powerful tools and applications, and we welcome your feedback.

Join our Discord or follow us on Twitter for future updates.

Have fun!

© 2026 Deep Infra. All rights reserved.