
Double exposure is a photography technique that combines multiple images into a single frame, creating a dreamlike and artistic effect. With the advent of AI image generation, we can now create stunning double exposure art in minutes using LoRA models. In this guide, we'll walk through how to use the Flux Double Exposure Magic LoRA from CivitAI with DeepInfra's deployment platform.

What You'll Need

- A CivitAI account (free)

- A DeepInfra account (free)

Set Up a LoRA Model

1. Log in to your DeepInfra account.

2. Navigate to the Deployments section.

3. Click the "New Deployment" button in the top right corner.

4. Select "LoRA text to image" from the options.

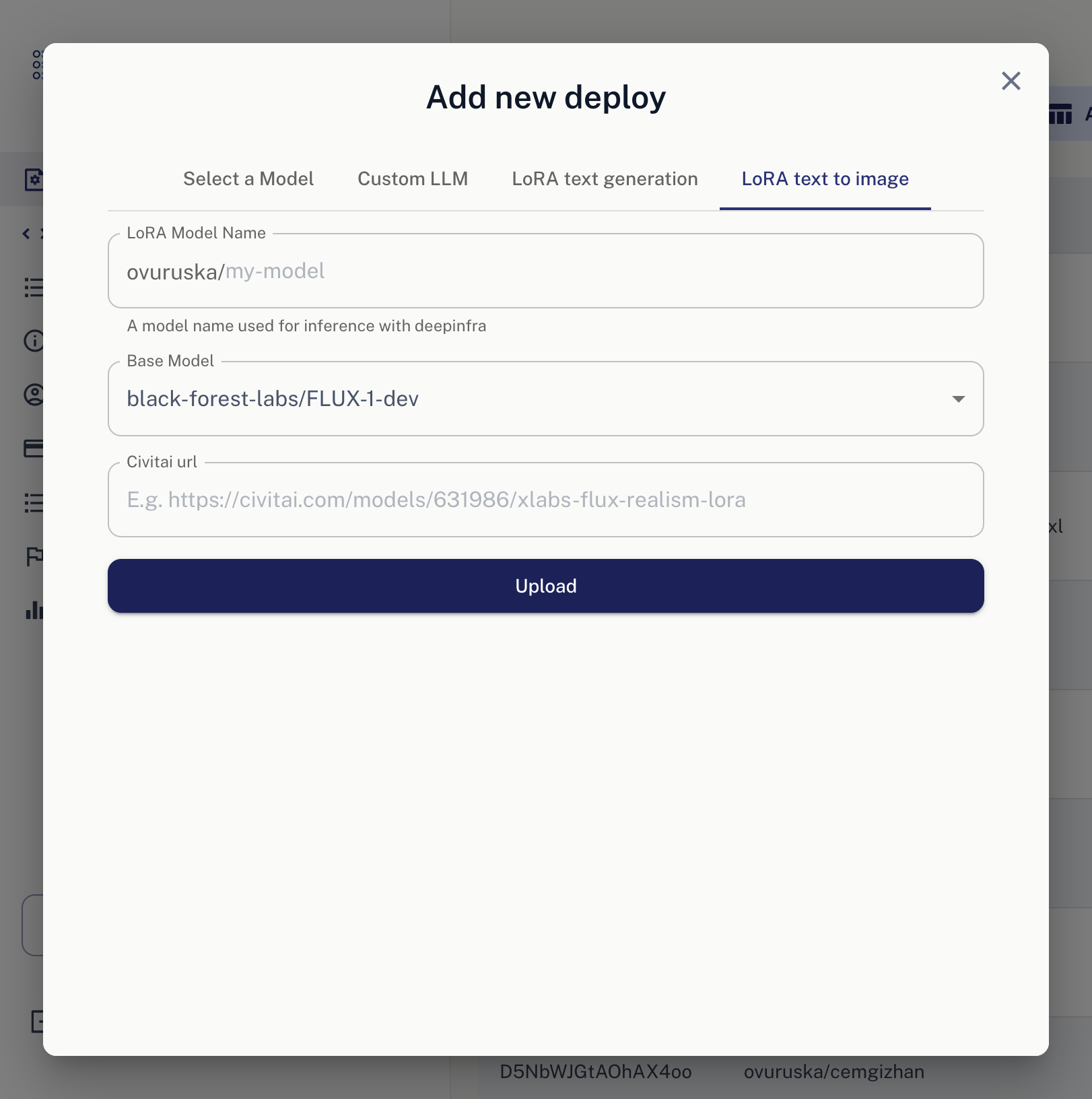

Once you select it, you will see the deployment form. Fill it in as follows:

5. Enter your preferred model name.

6. We'll use FLUX Dev as the base model for this LoRA, so you can keep the default selection.

7. Add the following CivitAI URL: https://civitai.com/models/715497/flux-double-exposure-magic?modelVersionId=859666

8. Click the "Upload" button, and that's it. Voilà!
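The CivitAI URL in step 7 encodes both the model (715497) and the specific version (modelVersionId=859666). If you swap in a different LoRA, a quick check like the minimal Python sketch below, which uses only the standard library, can confirm which model and version a pasted URL points to before you upload it. The helper name `civitai_ids` is hypothetical, and it assumes CivitAI model URLs keep the `/models/<model_id>/<slug>` shape seen in this guide.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlparse


def civitai_ids(url: str) -> Tuple[int, Optional[int]]:
    """Hypothetical helper: return (model_id, model_version_id) from a CivitAI model URL."""
    parsed = urlparse(url)
    if parsed.netloc not in ("civitai.com", "www.civitai.com"):
        raise ValueError(f"not a CivitAI URL: {url}")
    # ASSUMPTION: path shape is /models/<model_id>/<slug>, as in this guide's URL.
    parts = parsed.path.strip("/").split("/")
    if len(parts) < 2 or parts[0] != "models" or not parts[1].isdigit():
        raise ValueError(f"unexpected CivitAI path: {parsed.path}")
    model_id = int(parts[1])
    # modelVersionId is optional; None means the URL does not pin a version.
    version_values = parse_qs(parsed.query).get("modelVersionId")
    version_id = int(version_values[0]) if version_values else None
    return model_id, version_id


url = "https://civitai.com/models/715497/flux-double-exposure-magic?modelVersionId=859666"
print(civitai_ids(url))  # (715497, 859666)
```

A `None` version ID tells you the URL does not pin a specific version, which is worth noticing if you want reproducible results.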

Once LoRA processing has completed, navigate to

http://deepinfra.com/<your_name>/<lora_name>

There you will see the familiar DeepInfra model dashboard, showing your LoRA's name.
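If you prefer to call your deployment from code rather than the dashboard, here is a minimal Python sketch, using only the standard library, that builds the dashboard URL from this guide and a POST request for the deployed LoRA. It assumes that DeepInfra serves the LoRA at its generic inference endpoint `https://api.deepinfra.com/v1/inference/<your_name>/<lora_name>`, that the endpoint accepts a JSON body with a `prompt` field, and that your API token is sent as a bearer token; confirm all three against the API section of your own dashboard. The helper names `dashboard_url` and `inference_request` are hypothetical.

```python
import json
import urllib.request


def dashboard_url(user: str, lora_name: str) -> str:
    # Dashboard URL pattern given in this guide.
    return f"http://deepinfra.com/{user}/{lora_name}"


def inference_request(user: str, lora_name: str, prompt: str, token: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a request for the deployed LoRA."""
    # ASSUMPTION: endpoint path, "prompt" field, and bearer auth; verify on your dashboard.
    url = f"https://api.deepinfra.com/v1/inference/{user}/{lora_name}"
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


req = inference_request("your_name", "lora_name", "bo-exposure, double exposure", "YOUR_TOKEN")
print(req.full_url)  # https://api.deepinfra.com/v1/inference/your_name/lora_name
```

To actually send it, pass the request to `urllib.request.urlopen` and parse the JSON response. This guide does not describe the response fields (for example, how generated images are encoded), so inspect a real response before relying on any of them.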

An Example: Cyberpunk Double Exposure

Now let's create some stunning visuals. Here is the prompt behind this example:

bo-exposure, double exposure, cyberpunk city, robot face

Key Takeaway ⚠️

Notice how we use BOTH bo-exposure and double exposure. This combination is crucial: using both terms together gives you the best double exposure effect.
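If you generate many prompts programmatically, a small helper can make sure both trigger phrases are always present. The following is a minimal Python sketch; the function name `double_exposure_prompt` and its choice to put the triggers first are assumptions for illustration, mirroring the example prompt above rather than any documented requirement of the LoRA.

```python
# Trigger phrases recommended in this guide; using both gives the best effect.
TRIGGERS = ("bo-exposure", "double exposure")


def double_exposure_prompt(*subjects: str) -> str:
    """Hypothetical helper: build a comma-separated prompt led by both trigger phrases."""
    extra = []
    for subject in subjects:
        cleaned = subject.strip()
        # Skip empty entries and triggers the caller already supplied, so nothing repeats.
        if cleaned and cleaned.lower() not in TRIGGERS:
            extra.append(cleaned)
    return ", ".join([*TRIGGERS, *extra])


print(double_exposure_prompt("cyberpunk city", "robot face"))
# bo-exposure, double exposure, cyberpunk city, robot face
```

Deduplicating the triggers keeps prompts clean when callers pass "double exposure" themselves.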

More tutorials are on the way. See you in the next one 👋

© 2026 Deep Infra. All rights reserved.