Qwen3-Max-Thinking state-of-the-art reasoning model at your fingertips!

Delivering AI at scale requires multiple things working together: open-weight models, powerful hardware, and inference optimizations. DeepInfra was among the first to deploy production workloads on the NVIDIA Blackwell platform — and we're seeing up to 20x cost reductions when combining Mixture of Experts (MoE) architectures with Blackwell optimizations. Here's what we've built and learned.

Optimizing Inference on NVIDIA Blackwell

The NVIDIA Blackwell platform delivers a step change in AI inference performance. DeepInfra has built a comprehensive optimization stack to take full advantage of these capabilities.

The gains come from three layers working together: hardware acceleration from the NVIDIA Blackwell architecture, efficiency from open-weight MoE models, and DeepInfra's inference optimizations built on NVIDIA TensorRT-LLM, including speculative decoding and advanced memory management.

Layers of inference optimizations.

MoE + NVIDIA Blackwell + Lower Precision: The Full Stack

The real gains come from combining multiple optimizations. Here's what that looks like comparing a dense 405B model to an MoE model with 400B total parameters but only 17B active per token:

| Dense 405B Model | MoE 400B (FP8) Model | MoE 400B (FP8) Model | MoE 400B (NVFP4) Model | |

|---|---|---|---|---|

| Platform | NVIDIA H200 | NVIDIA H200 | NVIDIA HGX™ B200 | NVIDIA HGX™ B200 |

| Cost/1M tokens | $1.00 | $0.20 | $0.10 | $0.05 |

| Cost vs Dense | baseline | 5x lower cost | 10x lower cost | 20x lower cost |

From $1.00/1M tokens (Dense 405B) to $0.05/1M tokens (MoE NVFP4 on NVIDIA Blackwell) — 20x more cost effective.

20x cheaper than dense. Similar parameter scale, fraction of the cost.

Each layer compounds: MoE architecture reduces active compute, the Blackwell platform accelerates throughput, with NVFP4 quantization cutting memory and compute further.

Multi-Token Prediction and Speculative Decoding

Traditional autoregressive generation produces one token at a time. DeepInfra leverages Multi-Token Prediction (MTP) and Eagle speculative decoding to accelerate generation. By predicting multiple tokens simultaneously and verifying them in parallel, these techniques improve throughput on supported models.

Advanced Memory Management

Beyond the core optimizations, DeepInfra implements:

- KV-cache-aware routing: Intelligently directing requests to maximize cache reuse across the cluster

- Off-GPU KV cache offloading: Extending effective context length by leveraging system memory

- Adaptive parallelism strategies: Dynamically selecting tensor, pipeline, or expert parallelism based on model architecture and request characteristics

In Production: Latitude

What does this look like in practice? For Latitude, AI is the experience.

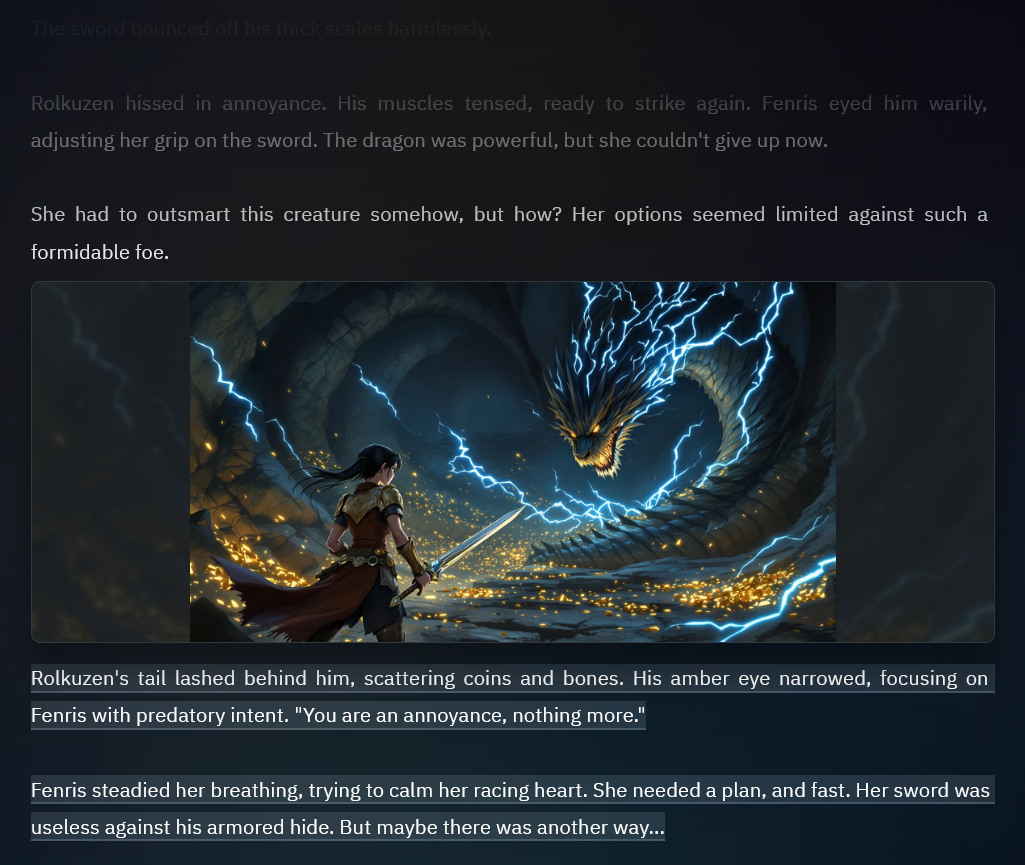

AI Dungeon serves 1.5 million monthly active users generating dynamic, AI-powered game narratives, and Latitude is expanding with Voyage, an upcoming AI RPG platform where players can create or play worlds with full freedom of action. Model responses aren't just part of the gameplay loop — they're the centerpiece of everything Latitude builds. Every improvement in model performance directly translates to better player experiences, higher engagement and retention, and ultimately drives revenue and growth.

AI Dungeon generates both narrative text and imagery in real-time as players explore dynamic stories.

AI Dungeon generates both narrative text and imagery in real-time as players explore dynamic stories.

Running large open-source MoE models on DeepInfra's Blackwell-based platform allows Latitude to deliver fast, reliable responses at a cost that scales with their player base.

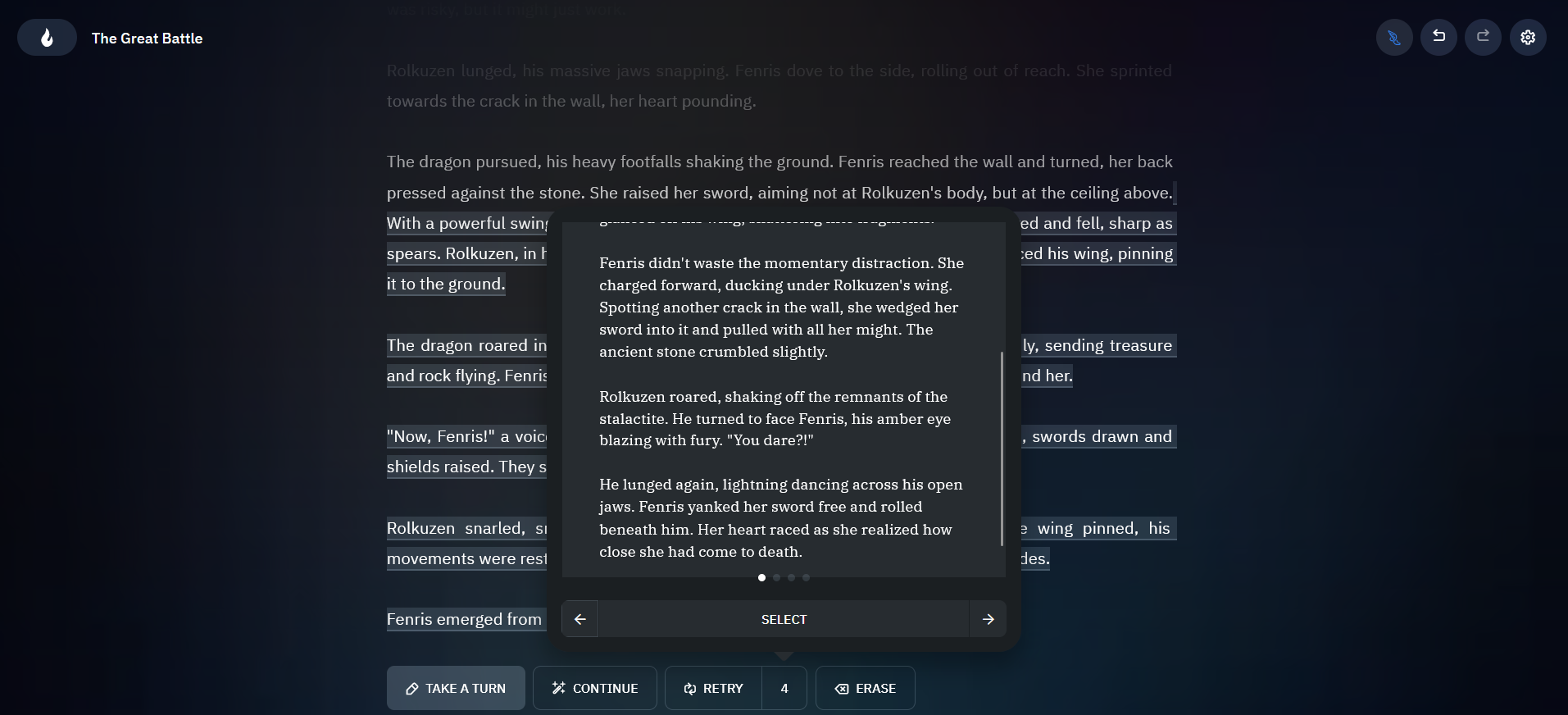

Players can choose from multiple AI-generated continuations, each requiring real-time inference.

Players can choose from multiple AI-generated continuations, each requiring real-time inference.

"For AI Dungeon, the model response is the game. Every millisecond of latency, every quality improvement matters — it directly impacts how players feel, how long they stay, and whether they come back. DeepInfra on NVIDIA Blackwell gives us the performance we need at a cost that actually works at scale."

— Nick Walton, CEO, Latitude

The Open Model Advantage

A key factor in Latitude's success is the flexibility that open-weight models provide. Rather than being locked into a single model, they can evaluate and deploy the best model for each specific use case — whether optimizing for creative storytelling, fast responses, or cost efficiency.

DeepInfra's platform supports this experimentation by offering a broad catalog of optimized open-source models, all benefiting from the NVIDIA Blackwell platform performance gains. Customers like Latitude can test different models, compare performance characteristics, and deploy the optimal configuration for their workload — without infrastructure overhead.

What's Next

The combination of the NVIDIA Blackwell platform, DeepInfra's inference optimizations, and the flexibility of open-weight models creates a powerful platform for AI-native applications. As models continue to grow in capability and efficiency, this infrastructure foundation will enable the next generation of AI experiences.

For developers and companies looking to reduce inference costs, the path forward is clear: modern hardware, purpose-built optimizations, and the freedom to choose the right model for the job.

To learn more about DeepInfra's Blackwell deployment or discuss your inference needs, visit deepinfra.com.

Technical References

Langchain improvements: async and streamingStarting from langchain

v0.0.322 you

can make efficient async generation and streaming tokens with deepinfra.

Async generation

The deepinfra wrapper now supports native async calls, so you can expect more

performance (no more t...

Langchain improvements: async and streamingStarting from langchain

v0.0.322 you

can make efficient async generation and streaming tokens with deepinfra.

Async generation

The deepinfra wrapper now supports native async calls, so you can expect more

performance (no more t... Build a Streaming Chat Backend in 10 Minutes<p>When large language models move from demos into real systems, expectations change. The goal is no longer to produce clever text, but to deliver predictable latency, responsive behavior, and reliable infrastructure characteristics. In chat-based systems, especially, how fast a response starts often matters more than how fast it finishes. This is where token streaming becomes […]</p>

Build a Streaming Chat Backend in 10 Minutes<p>When large language models move from demos into real systems, expectations change. The goal is no longer to produce clever text, but to deliver predictable latency, responsive behavior, and reliable infrastructure characteristics. In chat-based systems, especially, how fast a response starts often matters more than how fast it finishes. This is where token streaming becomes […]</p>

Use OpenAI API clients with LLaMasGetting started

# create a virtual environment

python3 -m venv .venv

# activate environment in current shell

. .venv/bin/activate

# install openai python client

pip install openai

Choose a model

meta-llama/Llama-2-70b-chat-hf

[meta-llama/L...

Use OpenAI API clients with LLaMasGetting started

# create a virtual environment

python3 -m venv .venv

# activate environment in current shell

. .venv/bin/activate

# install openai python client

pip install openai

Choose a model

meta-llama/Llama-2-70b-chat-hf

[meta-llama/L...

© 2026 Deep Infra. All rights reserved.