We use essential cookies to make our site work. With your consent, we may also use non-essential cookies to improve user experience and analyze website traffic…

Qwen3-Max-Thinking state-of-the-art reasoning model at your fingertips!

Did you just finetune your favorite model and are wondering where to run it? Well, we have you covered. Simple API and predictable pricing.

Put your model on huggingface

Use a private repo, if you wish, we don't mind. Create a hf access token just for the repo for better security.

Create custom deployment

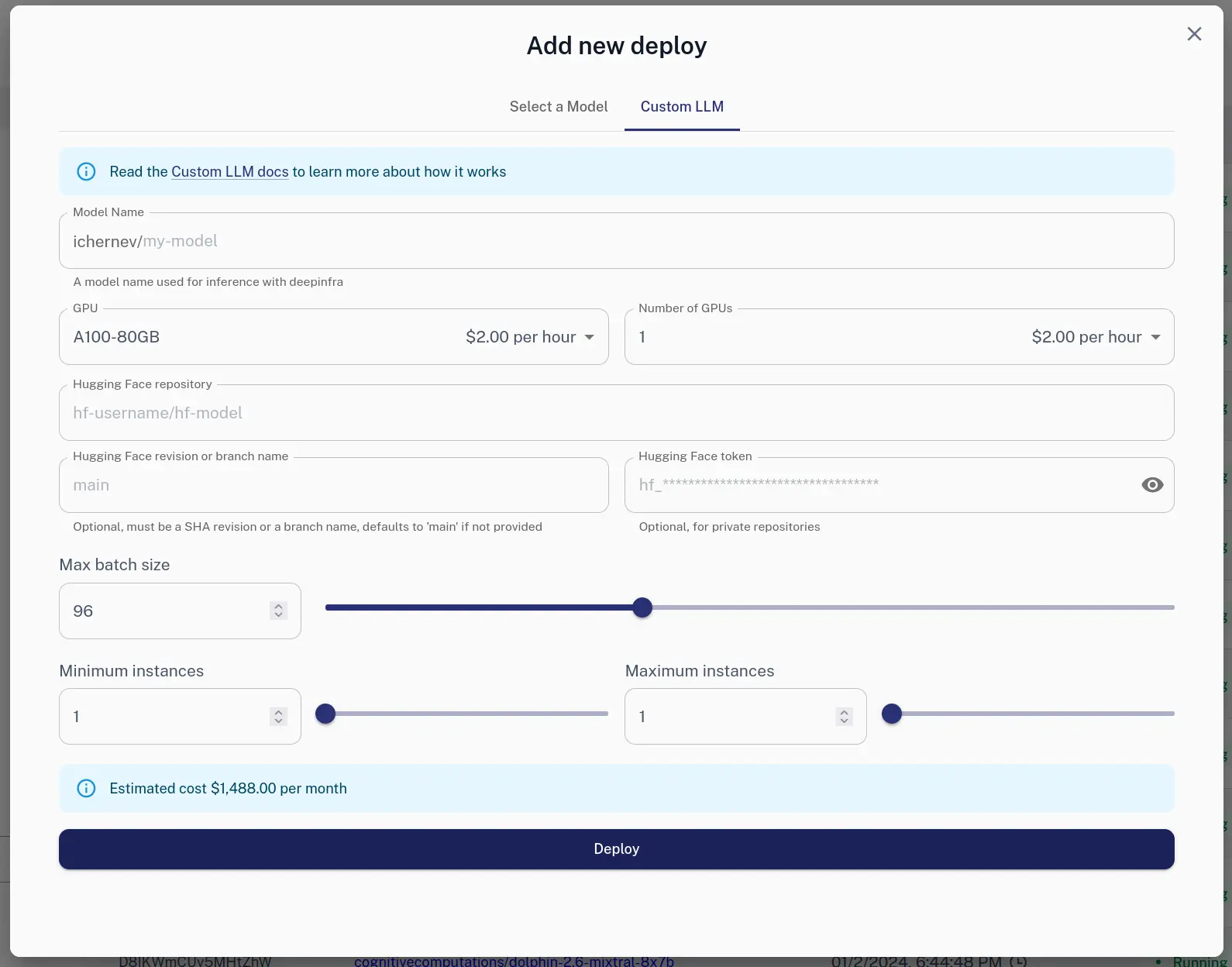

Via Web

You can use the Web UI to create a new deployment.

Via HTTP

We also offer HTTP API:

curl -X POST https://api.deepinfra.com/deploy/llm -d '{

"model_name": "test-model",

"gpu": "A100-80GB",

"num_gpus": 2,

"max_batch_size": 64,

"hf": {

"repo": "meta-llama/Llama-2-7b-chat-hf"

},

"settings": {

"min_instances": 1,

"max_instances": 1,

}

}' -H 'Content-Type: application/json' \

-H "Authorization: Bearer YOUR_API_KEY"

Use it

curl -X POST \

-d '{"input": "Hello"}' \

-H 'Content-Type: application/json' \

-H "Authorization: Bearer YOUR_API_KEY" \

'https://api.deepinfra.com/v1/inference/github-username/di-model-name'

For in depth tutorial check Custom LLM Docs.

Related articles Seed Anchoring and Parameter Tweaking with SDXL Turbo: Create Stunning Cubist ArtIn this blog post, we're going to explore how to create stunning cubist art using SDXL Turbo using some advanced image generation techniques.

Seed Anchoring and Parameter Tweaking with SDXL Turbo: Create Stunning Cubist ArtIn this blog post, we're going to explore how to create stunning cubist art using SDXL Turbo using some advanced image generation techniques. Best API for Kimi K2.5: Why DeepInfra Leads in Speed, TTFT, and Scalability<p>Kimi K2.5 is positioned as Moonshot AI’s “do-it-all” model for modern product workflows: native multimodality (text + vision/video), Instant vs. Thinking modes, and support for agentic / multi-agent (“swarm”) execution patterns. In real applications, though, model capability is only half the story. The provider’s inference stack determines the things your users actually feel: time-to-first-token (TTFT), […]</p>

Best API for Kimi K2.5: Why DeepInfra Leads in Speed, TTFT, and Scalability<p>Kimi K2.5 is positioned as Moonshot AI’s “do-it-all” model for modern product workflows: native multimodality (text + vision/video), Instant vs. Thinking modes, and support for agentic / multi-agent (“swarm”) execution patterns. In real applications, though, model capability is only half the story. The provider’s inference stack determines the things your users actually feel: time-to-first-token (TTFT), […]</p>

From Precision to Quantization: A Practical Guide to Faster, Cheaper LLMs<p>Large language models live and die by numbers—literally trillions of them. How finely we store those numbers (their precision) determines how much memory a model needs, how fast it runs, and sometimes how good its answers are. This article walks from the basics to the deep end: we’ll start with how computers even store a […]</p>

From Precision to Quantization: A Practical Guide to Faster, Cheaper LLMs<p>Large language models live and die by numbers—literally trillions of them. How finely we store those numbers (their precision) determines how much memory a model needs, how fast it runs, and sometimes how good its answers are. This article walks from the basics to the deep end: we’ll start with how computers even store a […]</p>

Seed Anchoring and Parameter Tweaking with SDXL Turbo: Create Stunning Cubist ArtIn this blog post, we're going to explore how to create stunning cubist art using SDXL Turbo using some advanced image generation techniques.

Seed Anchoring and Parameter Tweaking with SDXL Turbo: Create Stunning Cubist ArtIn this blog post, we're going to explore how to create stunning cubist art using SDXL Turbo using some advanced image generation techniques. Best API for Kimi K2.5: Why DeepInfra Leads in Speed, TTFT, and Scalability<p>Kimi K2.5 is positioned as Moonshot AI’s “do-it-all” model for modern product workflows: native multimodality (text + vision/video), Instant vs. Thinking modes, and support for agentic / multi-agent (“swarm”) execution patterns. In real applications, though, model capability is only half the story. The provider’s inference stack determines the things your users actually feel: time-to-first-token (TTFT), […]</p>

Best API for Kimi K2.5: Why DeepInfra Leads in Speed, TTFT, and Scalability<p>Kimi K2.5 is positioned as Moonshot AI’s “do-it-all” model for modern product workflows: native multimodality (text + vision/video), Instant vs. Thinking modes, and support for agentic / multi-agent (“swarm”) execution patterns. In real applications, though, model capability is only half the story. The provider’s inference stack determines the things your users actually feel: time-to-first-token (TTFT), […]</p>

From Precision to Quantization: A Practical Guide to Faster, Cheaper LLMs<p>Large language models live and die by numbers—literally trillions of them. How finely we store those numbers (their precision) determines how much memory a model needs, how fast it runs, and sometimes how good its answers are. This article walks from the basics to the deep end: we’ll start with how computers even store a […]</p>

From Precision to Quantization: A Practical Guide to Faster, Cheaper LLMs<p>Large language models live and die by numbers—literally trillions of them. How finely we store those numbers (their precision) determines how much memory a model needs, how fast it runs, and sometimes how good its answers are. This article walks from the basics to the deep end: we’ll start with how computers even store a […]</p>

© 2026 Deep Infra. All rights reserved.